A Simple Keras Facial Keypoint Detector

Miles McBain

2017-09-19

Last updated: 2017-09-21

Code version: e4725b1

Creating a baseline Keras Model

I’m going to build a very basic keras model that can detect a single facial keypoint. Later I will generalise this to many keypoints.

Source and Wrangle a Training Set

Get the training set:

library(readr)

library(keras)

library(dplyr)

Attaching package: 'dplyr'The following objects are masked from 'package:stats':

filter, lagThe following objects are masked from 'package:base':

intersect, setdiff, setequal, uniontrain_set <- read_rds("../data/train_processed")

# Original data from: https://www.kaggle.com/c/facial-keypoints-detection

# Preprocessing done in /code/preprocess_kaggle_set.R

train_set <-

train_set %>%

filter(!is.na(left_eye_center_x), !(is.na(left_eye_center_y)))The images are 96x96 256 greyscale, represented in 9216 vector pixels.

Check out an image:

image(matrix(rev(train_set$image[[42]]), 96, 96), col = gray.colors(256))

Choose X (the image) and Y (a facial keypoint):

Scale X pixel values from 0 - 255 to 0 - 1:

X <- unlist(X) / 255

dim(X) <- c(nrow(train_set), 96*96)Scale Y values from 0 - 96 to 0 - 1:

Y_1 <- Y_1 / 96

Y_2 <- Y_2 / 96 Create a network model

Define a simple model with [256 -> 128 -> 2] with dropout layers that apparently ensure features are distinct. No convolutions yet.

model_input <- layer_input(shape = c(96*96), dtype = 'float32', name = 'image_input')

main_network <-

model_input %>%

layer_dense(units = 256, activation = 'relu', input_shape = c(96*96)) %>%

layer_dropout(rate = 0.4) %>%

layer_dense(units = 128, activation = 'relu') %>%

layer_dropout(rate = 0.3) %>%

layer_dense(units = 2, activation = 'relu')

output_y1 <-

main_network %>%

layer_dense(units = 1, activation = "linear", name = "output_y1")

output_y2 <-

main_network %>%

layer_dense(units = 1, activation = "linear", name = "output_y2")

model <- keras_model(

inputs = model_input,

outputs = c(output_y1, output_y2)

)

model Model

___________________________________________________________________________

Layer (type) Output Shape Param # Connected to

===========================================================================

image_input (InputLayer (None, 9216) 0

___________________________________________________________________________

dense_1 (Dense) (None, 256) 2359552 image_input[0][0]

___________________________________________________________________________

dropout_1 (Dropout) (None, 256) 0 dense_1[0][0]

___________________________________________________________________________

dense_2 (Dense) (None, 128) 32896 dropout_1[0][0]

___________________________________________________________________________

dropout_2 (Dropout) (None, 128) 0 dense_2[0][0]

___________________________________________________________________________

dense_3 (Dense) (None, 2) 258 dropout_2[0][0]

___________________________________________________________________________

output_y1 (Dense) (None, 1) 3 dense_3[0][0]

___________________________________________________________________________

output_y2 (Dense) (None, 1) 3 dense_3[0][0]

===========================================================================

Total params: 2,392,712

Trainable params: 2,392,712

Non-trainable params: 0

___________________________________________________________________________Compile model

model %>%

compile(loss = 'mean_squared_error', # MSE for continuous output

loss_weights = c(0.5, 0.5), # Weight both coords equally

optimizer = optimizer_adam())Fit model

fit_progress <-

model %>%

fit(x = X,

y = list(output_y1 = Y_1, output_y2 = Y_2),

epochs = 30,

batch_size = 128,

validation_split = 0.2)Visualise Model

plot(fit_progress)

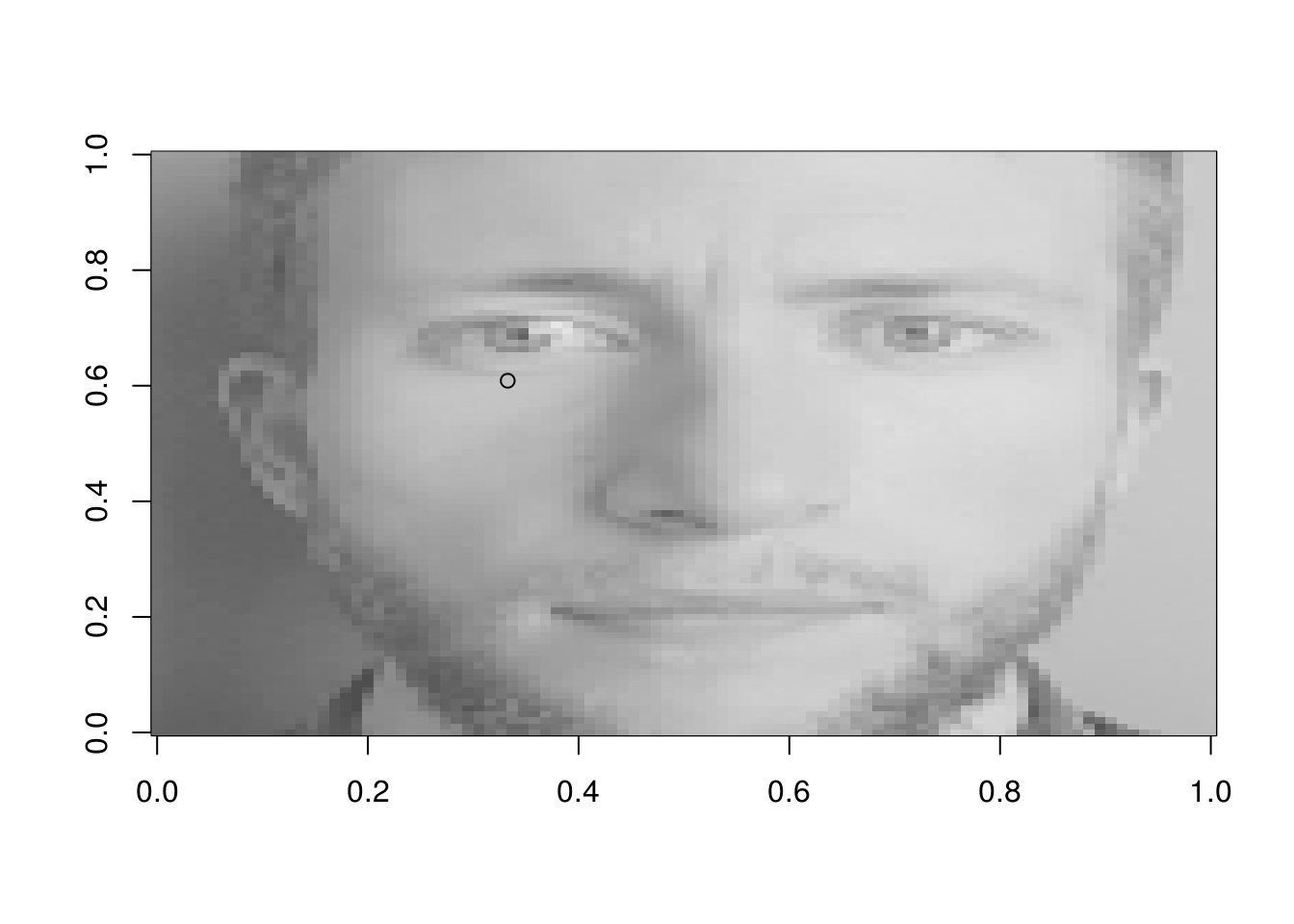

Predict my eye

miles_face <-

read_rds("../data/mm_face_vector.rds")

# A cropped square selfie.

# Preprocessing with magick in /code/preprocess_a_selfie.R

miles_face_matrix <-

miles_face %>%

`/`(256) %>%

matrix(nrow = 1, ncol = 96*96 )

eye_prediction <-

model %>%

predict_on_batch(miles_face_matrix)

image(matrix(miles_face %>% rev(), 96, 96) , col = gray.colors(256)) # Have to reverse image for upright viewing

points(x = 1- eye_prediction[[1]], y= 1- eye_prediction[[2]]) # Reversed image -> Take compliement of coord.

Not bad for a baseline model. Can I do better with convolutions? Most likely!

Session information

sessionInfo()R version 3.4.1 (2017-06-30)

Platform: x86_64-pc-linux-gnu (64-bit)

Running under: Ubuntu 17.04

Matrix products: default

BLAS: /usr/lib/libblas/libblas.so.3.7.0

LAPACK: /usr/lib/lapack/liblapack.so.3.7.0

locale:

[1] LC_CTYPE=en_AU.UTF-8 LC_NUMERIC=C

[3] LC_TIME=en_AU.UTF-8 LC_COLLATE=en_AU.UTF-8

[5] LC_MONETARY=en_AU.UTF-8 LC_MESSAGES=en_AU.UTF-8

[7] LC_PAPER=en_AU.UTF-8 LC_NAME=C

[9] LC_ADDRESS=C LC_TELEPHONE=C

[11] LC_MEASUREMENT=en_AU.UTF-8 LC_IDENTIFICATION=C

attached base packages:

[1] stats graphics grDevices utils datasets methods base

other attached packages:

[1] bindrcpp_0.2 dplyr_0.7.3 keras_2.0.6 readr_1.1.1

loaded via a namespace (and not attached):

[1] Rcpp_0.12.12 bindr_0.1 knitr_1.17 magrittr_1.5

[5] hms_0.3 munsell_0.4.3 colorspace_1.3-2 R6_2.2.2

[9] rlang_0.1.2 plyr_1.8.4 stringr_1.2.0 tools_3.4.1

[13] grid_3.4.1 gtable_0.2.0 git2r_0.19.0 htmltools_0.3.6

[17] tfruns_0.9.1 lazyeval_0.2.0 yaml_2.1.14 rprojroot_1.2

[21] digest_0.6.12 assertthat_0.2.0 tibble_1.3.4 tensorflow_1.3.1

[25] reshape2_1.4.2 ggplot2_2.2.1 glue_1.1.1 evaluate_0.10.1

[29] rmarkdown_1.6 labeling_0.3 stringi_1.1.5 compiler_3.4.1

[33] scales_0.5.0 backports_1.1.0 reticulate_1.1 jsonlite_1.5

[37] pkgconfig_2.0.1 This R Markdown site was created with workflowr